Emotion-Controllable Impression Utterance Generation for Visual Art

Ryo Ueda, Hiromi Narimatsu, Yusuke Miyao and Shiro Kumano, in Proc. International Conference on Affective Computing and Intelligent Interaction (ACII 2023), pp. 1-8, 2023.

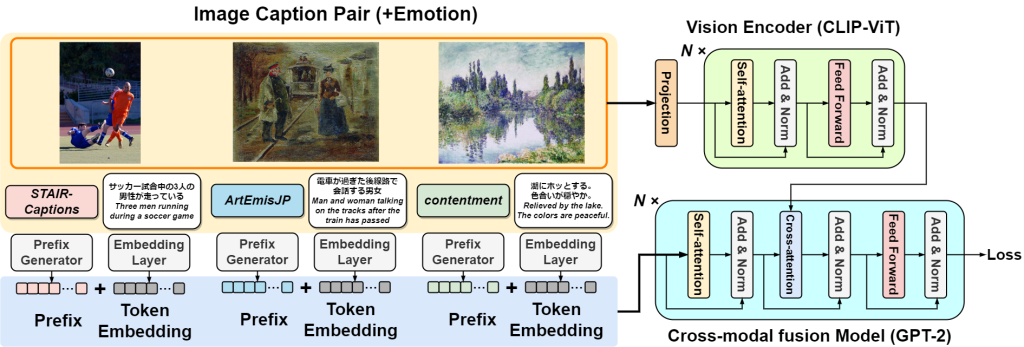

The degree of subjectivity and the type of emotions when people express their impressions of an object depend on various factors, including their affective states, psychological traits, goals, and social norms. However, most previous efforts in generating people’s impressions of objects have focused on extreme cases, i.e., fully objective or fully emotional, as represented by the MS-COCO and ArtEmis datasets, respectively. We propose an emotional impression generation method that continuously controls the degree of subjectivity versus objectivity and the type of emotion in a unified manner. In the framework of ConCap, which allows controlling text style by modifying an auxiliary input called a prefix, we propose to use two types of prefixes to jointly control the degree of subjectivity and the type of emotion: a subjective-style prefix and a categorical-emotional-style prefix. The subjective-style prefix is holistic and attempts to describe the entire emotion felt by a group of people, although different individuals may feel different emotions. The categorical-emotional-style prefix is more individual-oriented and tries to focus on a single or a few specific emotions. An experiment using both objectively and subjectively descriptive datasets shows qualitatively and quantitatively that the proposed method provides good gradual control of impression expressions of visual art in terms of both the degree of subjectivity versus objectivity and the emotion category.

This is a collaborative work with Miyao Lab. at the University of Tokyo.