Consistent Smile Intensity Estimation from Wearable Optical Sensors

Katsutoshi Masai, Monica Perusquía-Hernandez, Maki Sugimoto, Shiro Kumano and Toshitaka Kimura

International Conference on Affective Computing & Intelligent Interaction (ACII 2022)

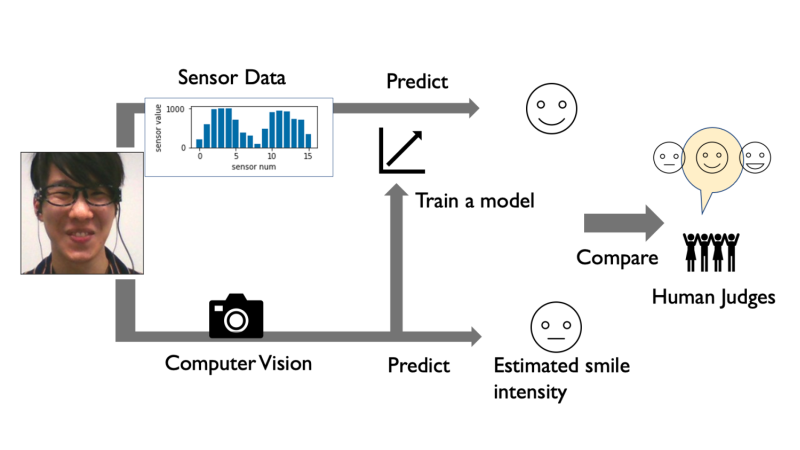

Smiling plays a crucial role in human communication; it is the most frequent facial expression in daily life. Smile analysis usually relies on computer vision methods that use datasets annotated by experts. However, in most realistic scenarios, cameras are constrained by limited space and occlusions. Wearable electromyography is a promising alternative, but user comfort is a barrier to long-term use. Other wearable methods can detect smiles, but they lack consistency because they rely on subjective criteria rather than expert annotation. We investigate a wearable method that uses optical sensors to estimate smile intensity consistently while reducing manual annotation cost. First, we use the output of a state-of-the-art computer vision method (OpenFace) as labels to train a regression model that estimates smile intensity from the sensor data. Then, we compare the estimation results with those of OpenFace, and we compare both with human annotation. The results show that the wearable method correlates more strongly with human-annotated smile intensity (r = 0.67) than OpenFace does (r = 0.56). Moreover, when the sensor data and the OpenFace output were fused, the multimodal method produced estimates even closer to human annotation (r = 0.74). Finally, to assess the potential of wearable smile intensity estimation, we investigate how the synchrony of smile dynamics among subjects correlates with their average smile intensity.
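The evaluation pipeline described above (train on camera-derived labels, then score each estimate against human annotation with Pearson's r) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the linear ridge regressor, the 16 hypothetical sensor channels, the frame counts, the late fusion by simple averaging, and all data are assumptions, and the signals are synthetic stand-ins rather than real sensor, OpenFace, or annotation data.

```python
import numpy as np


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression; the intercept is not penalized."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w


def pearson_r(a, b):
    """Pearson correlation coefficient between two 1-D series."""
    return float(np.corrcoef(a, b)[0, 1])


rng = np.random.default_rng(0)
n_frames, n_sensors = 2000, 16  # hypothetical frame count and channel count

# Synthetic stand-ins, NOT the paper's data: a latent smile intensity on a 0-5 scale.
latent = 5.0 * np.clip(np.sin(np.linspace(0.0, 20.0, n_frames)), 0.0, None)
gains = rng.normal(1.0, 0.3, n_sensors)  # assumed per-channel sensitivity to the smile
sensors = latent[:, None] * gains + rng.normal(0.0, 0.5, (n_frames, n_sensors))
openface = latent + rng.normal(0.0, 1.0, n_frames)  # noisy camera-based estimate
human = latent + rng.normal(0.0, 0.5, n_frames)     # human annotation, used only for evaluation

train, test = slice(0, 1500), slice(1500, None)

# 1) Train the sensor model on the camera-based output only: no manual labels needed.
w, b = fit_ridge(sensors[train], openface[train])
sensor_pred = sensors[test] @ w + b

# 2) Late fusion (an assumed strategy): average the two per-frame estimates.
fused_pred = 0.5 * (sensor_pred + openface[test])

# 3) Compare each estimate against the human annotation on held-out frames.
r_sensor = pearson_r(sensor_pred, human[test])
r_openface = pearson_r(openface[test], human[test])
r_fused = pearson_r(fused_pred, human[test])
print(f"sensor r={r_sensor:.2f}  openface r={r_openface:.2f}  fused r={r_fused:.2f}")
```

Because the synthetic noise levels are arbitrary, the printed coefficients say nothing about the reported values; the sketch only shows the structure of the comparison. Averaging is the simplest fusion choice: when the two estimates have roughly independent errors, their mean has lower error variance than the noisier input, which tends to raise its correlation with the annotation.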