Gravity-Direction-Aware Joint Inter-Device Matching and Temporal Alignment between Camera and Wearable Sensors

Hiroyuki Ishihara & Shiro Kumano

ICMI ’20 Companion, 2020.

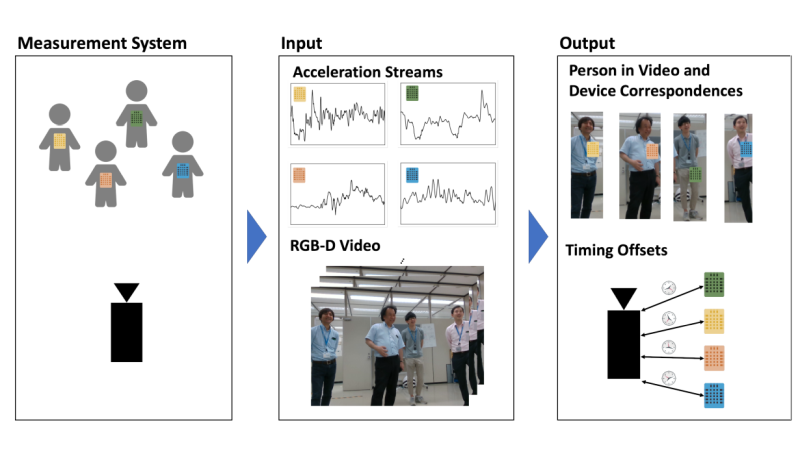

To analyze human interaction behavior in a group or crowd, person-device identification and inter-device time synchronization are essential, but performing them manually is time-consuming. To automate both processes jointly, without any calibration step or auxiliary sensor, this paper presents an acceleration-correlation-based method for multi-person interaction scenarios in which each target person wears an accelerometer and a stationary camera observes the scene. A critical issue is the time-varying gravity direction component in the wearable device's acceleration: it degrades the correlation of body acceleration between the device and the video, yet it is hard to estimate accurately and remove. Our basic idea is instead to estimate the gravity direction component in the camera coordinate system, where it can be obtained analytically, and to add it to the vision-based data to compensate for the degraded correlation. We obtained high accuracy when matching four persons to their devices using only 40 to 60 frames (4 to 6 seconds). The average time-offset estimation error is about 5 frames (0.5 seconds). The experimental results suggest that the method is useful for analyzing individual trajectories and group dynamics at low frequencies.
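The abstract does not spell out the estimator, so the following is only a minimal sketch of the general idea under stated assumptions. It assumes each person's track is available as 3D camera-frame positions at 10 fps (as implied by 40 frames corresponding to 4 seconds), that the gravity vector in camera coordinates is known and constant for the stationary camera (the hypothetical `G_CAM`), and that device orientation is sidestepped by comparing acceleration magnitudes. The helper names (`vision_force_norm`, `device_force_norm`, `best_lag`, `joint_match`) and the magnitude-plus-Pearson-correlation scoring are illustrative choices, not necessarily the authors' formulation.

```python
import math
from itertools import permutations

# Hypothetical gravity vector in camera coordinates (m/s^2), assuming the
# camera's y-axis points straight down. A real setup would derive it from
# the camera's pose relative to the ground.
G_CAM = (0.0, 9.81, 0.0)


def vision_force_norm(positions, dt, g_cam=G_CAM):
    """Gravity-compensated acceleration magnitude from a camera track.

    positions: camera-frame 3D positions (metres), one tuple per frame.
    An accelerometer senses specific force a - g, so subtracting the camera-frame
    gravity vector (i.e. adding the upward 1 g component) to the vision-based
    acceleration makes the signals comparable; the norm drops device orientation.
    Returns one value per interior frame (frames 1 .. len - 2).
    """
    norms = []
    for k in range(1, len(positions) - 1):
        prev, cur, nxt = positions[k - 1], positions[k], positions[k + 1]
        # Second central difference approximates the acceleration at frame k.
        acc = [(nxt[i] - 2.0 * cur[i] + prev[i]) / dt ** 2 for i in range(3)]
        force = [acc[i] - g_cam[i] for i in range(3)]
        norms.append(math.sqrt(sum(c * c for c in force)))
    return norms


def device_force_norm(samples):
    """Magnitude of raw 3-axis accelerometer samples (orientation-free)."""
    return [math.sqrt(sum(c * c for c in s)) for s in samples]


def pearson(x, y):
    """Pearson correlation coefficient; 0.0 if either signal is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0.0 or syy == 0.0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def best_lag(vis, dev, max_lag, min_overlap=10):
    """Return (correlation, lag) maximising corr(vis[t], dev[t + lag])."""
    best = (float("-inf"), 0)
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(vis[t], dev[t + lag]) for t in range(len(vis))
                 if 0 <= t + lag < len(dev)]
        if len(pairs) < min_overlap:
            continue  # too little overlap for a meaningful correlation
        r = pearson([p for p, _ in pairs], [q for _, q in pairs])
        if r > best[0]:
            best = (r, lag)
    return best


def joint_match(vis_signals, dev_signals, max_lag):
    """Jointly assign persons to devices and estimate each pair's frame offset.

    Brute-force search over assignments (fine for a handful of people); the
    score of an assignment is the sum of the best lagged correlations.
    Returns {person_index: (device_index, lag_in_frames)}.
    """
    n = len(vis_signals)
    table = [[best_lag(v, d, max_lag) for d in dev_signals] for v in vis_signals]
    best_perm = max(
        permutations(range(len(dev_signals)), n),
        key=lambda perm: sum(table[p][perm[p]][0] for p in range(n)),
    )
    return {p: (best_perm[p], table[p][best_perm[p]][1]) for p in range(n)}
```

Because matching and lag estimation share one correlation table, the assignment and the time offsets come out of a single search, which mirrors the "joint" framing of the abstract. The permutation search grows factorially with group size; for larger groups, an optimal assignment solver such as SciPy's `linear_sum_assignment` on the negated correlation table would be the usual substitute. At the assumed 10 fps, a lag of one frame corresponds to 0.1 seconds.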