Synergistic Functional Spectrum Analysis: A Framework for Exploring the Multifunctional Interplay Among Multimodal Nonverbal Behaviours in Conversations

Mai Imamura, Ayane Tashiro, Shiro Kumano and Kazuhiro Otsuka, IEEE Transactions on Affective Computing, PrePrints pp. 1-18. (doi:10.1109/TAFFC.2024.3491097)

ABSTRACT

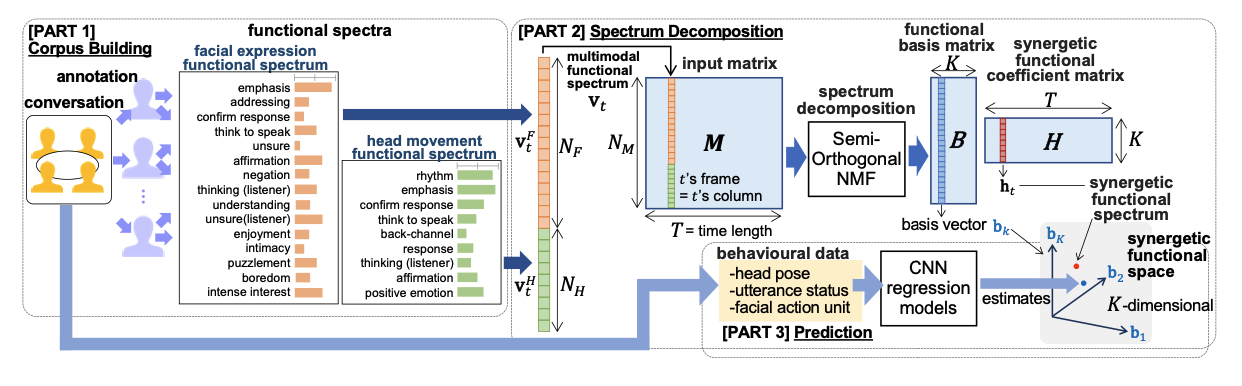

A novel framework named the synergistic functional spectrum analysis (sFSA) is proposed to explore the multifunctional interplay among multimodal nonverbal behaviours in human conversations. This study aims to reveal how multimodal nonverbal behaviours cooperatively perform communicative functions in conversations. To capture the intrinsic nature of nonverbal expressions, namely functional multiplicity and interpretational ambiguity (e.g., a single head nod could imply listening, agreeing, or both), the sFSA introduces a novel concept named the functional spectrum, which is defined as the distribution of perceptual intensities of multiple functions by multiple observers. Based on this concept, this paper presents functional spectrum corpora, which target 44 facial expression and 32 head movement functions. Then, spectrum decomposition is conducted to reduce the multimodal functional spectrum to a synergistic functional spectrum in a lower-dimensional functional space that is spanned by functional basis vectors representing primary and distinctive functionalities across multiple modalities. To that end, we propose a semiorthogonal nonnegative matrix factorization (SO-NMF) method, which assumes the additivity of multiple functions and aims to balance the distinctiveness and expressiveness of the factorization. The results confirm that some primary functional bases can be identified, which can be interpreted as the listener’s backchannel, thinking, and affirmative response functions; the speaker’s thinking and addressing functions; and their positive emotion functions. In addition, regression models based on convolutional neural networks (CNNs) are presented to estimate the synergistic functional spectrum from the head poses and facial action units measured from conversation data. The results of these analyses and experiments confirm the potential of the sFSA and may lead to future extensions.
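To make the two central ideas of the abstract concrete, the following is a minimal sketch and not the paper's implementation. It assumes that each observer gives a binary judgement per frame and function, and that the functional spectrum can be approximated by averaging those judgements across observers. The decomposition shown is a generic nonnegative matrix factorization with a soft penalty on the overlap between basis vectors, used here as a stand-in for SO-NMF, whose exact formulation is not given in the abstract. The names `functional_spectrum`, `soft_orthogonal_nmf`, and `lam`, as well as the toy dimensions, are hypothetical.

```python
import numpy as np

def functional_spectrum(annotations):
    """Average binary judgements over observers.

    annotations: array of shape (observers, frames, functions), values in {0, 1}.
    Returns an array of shape (frames, functions) with values in [0, 1].
    Assumption: intensity = fraction of observers who perceived the function.
    """
    return np.asarray(annotations, dtype=float).mean(axis=0)

def soft_orthogonal_nmf(V, rank, lam=0.1, n_iter=200, seed=0, eps=1e-9):
    """Factorize V (functions x frames) as W @ H with W, H >= 0.

    Multiplicative updates for ||V - W H||^2 + lam * sum_{i != j} w_i . w_j,
    where w_i are the columns of W (the functional basis vectors).
    A larger lam pushes bases apart (distinctiveness); a smaller lam
    favours reconstruction quality (expressiveness).
    This is an illustrative penalty, not the paper's SO-NMF.
    """
    rng = np.random.default_rng(seed)
    n_funcs, n_frames = V.shape
    W = rng.random((n_funcs, rank)) + eps
    H = rng.random((rank, n_frames)) + eps
    off_diag = np.ones((rank, rank)) - np.eye(rank)  # selects pairs i != j
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ (H @ H.T) + lam * (W @ off_diag) + eps)
    return W, H

# Toy data: 5 observers, 40 frames, 6 functions (all sizes are made up).
toy_rng = np.random.default_rng(1)
annotations = toy_rng.integers(0, 2, size=(5, 40, 6))
spectrum = functional_spectrum(annotations)       # (frames, functions)
W, H = soft_orthogonal_nmf(spectrum.T, rank=3)    # W: bases, H: activations
```

In this sketch the columns of `W` play the role of functional basis vectors and the columns of `H` give a lower-dimensional spectrum for each frame. The additivity assumption mentioned in the abstract corresponds to the nonnegativity of both factors, so that each frame is described as a sum of bases with no cancellation.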

This is a collaborative work with Otsuka Lab. at Yokohama National University.